LightGBM is a newer tool than XGBoost. I have several questions below.

Deciding On How To Boost Your Decision Trees By Stephanie Bourdeau Medium

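XGBoost and Random Forest are different algorithms, so neither one is used to "train" the other. The XGBoost library can, however, fit random-forest-style ensembles, while a standard Random Forest grows independent trees on bootstrap samples instead of boosting them sequentially.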

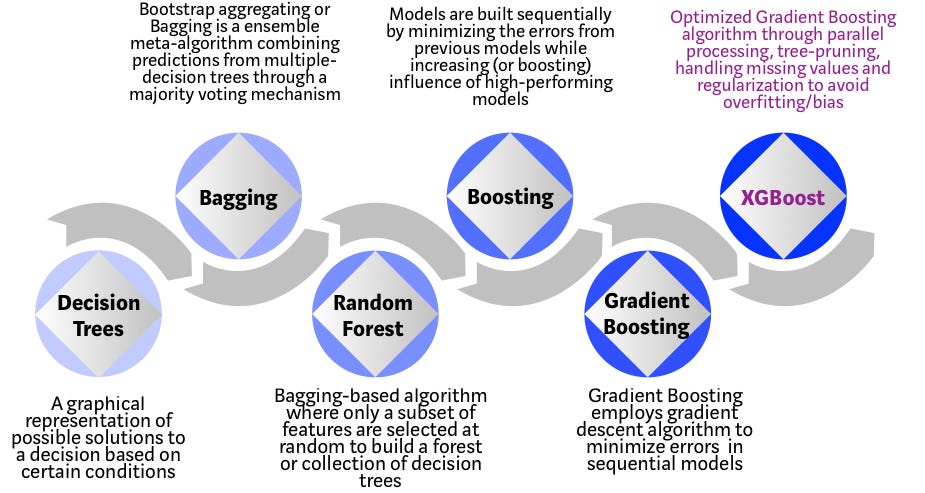

The different types of boosting algorithms covered here are AdaBoost, Gradient Boosting, and XGBoost. XGBoost uses advanced regularization (L1 and L2), which improves model generalization. Gradient boosted trees consider the special case where the simple model h is a decision tree.

Regular gradient boosting uses the loss function of the base model (e.g. a decision tree) as a proxy for minimizing the error of the overall model. XGBoost is generally faster than plain gradient boosting, but gradient boosting as a technique has a wide range of applications.

Extreme Gradient Boosting (XGBoost) is one of the most popular variants of gradient boosting. Over the years, gradient boosting has found applications across various technical fields.

I think the Wikipedia article on gradient boosting (also called a Gradient Boosting Machine, or GBM) explains the connection to gradient descent really well.

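Differences between Random Forest and XGBoost: Random Forest is a bagging method, while XGBoost is a boosting method. Boosting algorithms such as AdaBoost and gradient boosting convert a set of weak learners into a single strong learner. Plain gradient boosting minimizes the training loss without an explicit penalty on model complexity, whereas XGBoost adds a regularization term to its objective to help manage the bias-variance trade-off.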

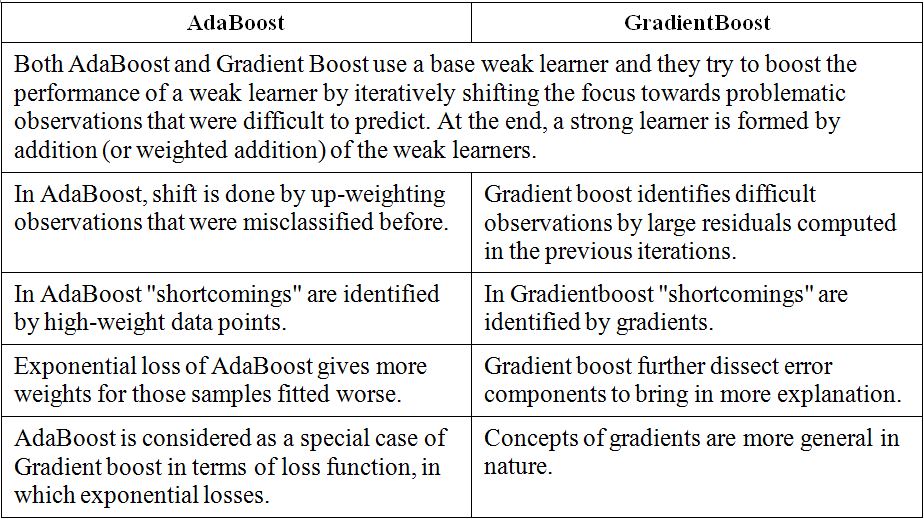

XGBoost is a more regularized form of Gradient Boosting. On the difference between Gradient Boosting and AdaBoost: both are ensemble techniques applied in machine learning to enhance the efficacy of weak learners. AdaBoost, developed by Freund and Schapire, was the first practical boosting algorithm.
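To make the AdaBoost side of that comparison concrete, here is a minimal sketch of a single AdaBoost round for binary labels in {-1, +1}. The function name `adaboost_round` and the toy data are my own illustration, not taken from any of the quoted articles, and the sketch assumes the weighted error is strictly between 0 and 1.

```python
import math

def adaboost_round(y, h, weights):
    """One AdaBoost round for labels y and weak-learner predictions h in {-1, +1}.

    Assumes the weighted error is strictly between 0 and 1.
    """
    # Weighted error of the weak learner under the current sample weights.
    err = sum(w for yi, hi, w in zip(y, h, weights) if yi != hi)
    # Learner weight: larger when the weak learner is more accurate.
    alpha = 0.5 * math.log((1 - err) / err)
    # Up-weight misclassified samples, down-weight correct ones, then normalize.
    new_w = [w * math.exp(-alpha * yi * hi) for yi, hi, w in zip(y, h, weights)]
    total = sum(new_w)
    return alpha, [w / total for w in new_w]

# Toy example: five samples with uniform weights; only the last one is misclassified.
y = [1, 1, -1, -1, 1]
h = [1, 1, -1, -1, -1]
weights = [0.2] * 5
alpha, new_weights = adaboost_round(y, h, weights)
```

After this round the single misclassified sample carries half of the total weight, so the next weak learner is pushed to get it right. Gradient boosting replaces this reweighting with fitting each new learner to the gradient of a loss.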

AdaBoost is short for Adaptive Boosting. Rather than using the loss of the base learner (e.g. a decision tree) as a proxy for minimizing the error of the overall model, XGBoost uses the second-order derivative of the loss as an approximation. These algorithms yield the best results in many competitions and hackathons hosted on multiple platforms.

Gradient boosted trees have been around for a while, and there is a lot of material on the topic. The earlier implementations worked but weren't that efficient.

The gradient boosting algorithm can be used to train models for both regression and classification problems. So what are the differences between Adaptive Boosting and Gradient Boosting?

XGBoost typically delivers higher performance than plain Gradient Boosting. Boosting is a method of converting a set of weak learners into a strong learner. The three algorithms discussed here are AdaBoost, Gradient Boosting, and XGBoost.

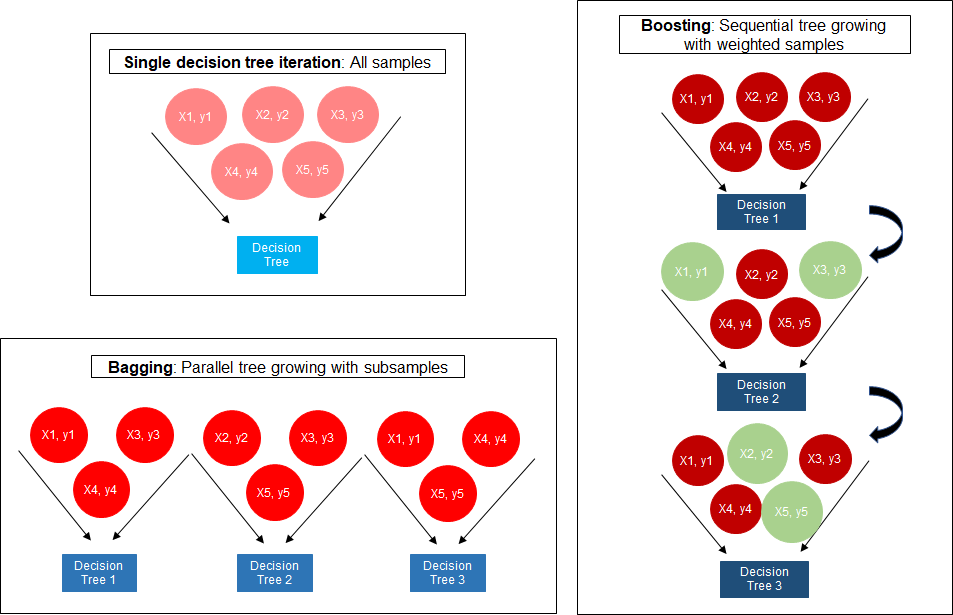

XGBoost computes second-order gradients, i.e. second partial derivatives of the loss function. The concept behind boosting is to train predictors sequentially, where every subsequent model tries to fix the flaws of its predecessor. XGBoost's internal mechanism is fundamentally different from Random Forest's: it uses gradient boosting, whereas Random Forest relies on bagging.
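The idea that every subsequent model tries to fix the flaws of its predecessor can be sketched in a few lines. This is a minimal illustration of first-order gradient boosting with squared loss and one-feature decision stumps, not XGBoost's actual implementation. All function names and the toy data are hypothetical, and the stump search assumes at least two distinct feature values.

```python
def fit_stump(x, residuals):
    """Pick the threshold on one feature that minimizes squared error on the residuals."""
    best = None
    xs = sorted(set(x))
    for lo, hi in zip(xs, xs[1:]):
        t = (lo + hi) / 2
        left = [r for xi, r in zip(x, residuals) if xi <= t]
        right = [r for xi, r in zip(x, residuals) if xi > t]
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        err = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
        if best is None or err < best[0]:
            best = (err, t, lv, rv)
    return best[1:]  # (threshold, left value, right value)

def stump_predict(stump, xi):
    t, lv, rv = stump
    return lv if xi <= t else rv

def gradient_boost(x, y, n_rounds=20, learning_rate=0.5):
    """Fit stumps sequentially; each one is trained on the current residuals."""
    base = sum(y) / len(y)
    preds = [base] * len(y)
    stumps, losses = [], []
    for _ in range(n_rounds):
        # For squared loss, the negative gradient is simply the residual.
        residuals = [yi - pi for yi, pi in zip(y, preds)]
        stump = fit_stump(x, residuals)
        stumps.append(stump)
        preds = [pi + learning_rate * stump_predict(stump, xi) for xi, pi in zip(x, preds)]
        losses.append(sum((yi - pi) ** 2 for yi, pi in zip(y, preds)) / len(y))
    return base, stumps, losses

def predict(base, stumps, xi, learning_rate=0.5):
    return base + learning_rate * sum(stump_predict(s, xi) for s in stumps)

x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [1.0, 1.2, 0.9, 1.1, 3.0, 3.2, 2.9, 3.1]
base, stumps, losses = gradient_boost(x, y)
```

The training loss recorded in `losses` shrinks from round to round because each stump only has to explain what the previous ones left over.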

Gradient Boosting is also a boosting algorithm, hence it also tries to create a strong learner from an ensemble of weak learners. Gradient Boosting was developed as a generalization of AdaBoost, by observing that what AdaBoost was doing was a gradient search in decision tree space.

I think the difference between gradient boosting and XGBoost is that XGBoost focuses on computational performance by parallelizing tree construction, as one can see in this blog. AdaBoost, Gradient Boosting, and XGBoost are three algorithms that do not get much recognition. XGBoost and LightGBM are packages belonging to the family of gradient boosting decision trees (GBDTs).

Traditionally, XGBoost has been slower than LightGBM, but it achieves faster training through histogram binning. XGBoost specifically trains gradient boosted decision trees, although it can also use a linear model as the base learner.
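As a rough illustration of boosting with a linear base learner, here is a small sketch in plain Python. It is not XGBoost's own linear booster, only the general idea: each round fits a least-squares line to the current residuals and adds a shrunken copy of it to the model. The helper names `fit_line` and `boost_linear` are hypothetical.

```python
def fit_line(x, r):
    """Least-squares fit of r ~ a * x + b for a single feature."""
    n = len(x)
    mx, mr = sum(x) / n, sum(r) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxr = sum((xi - mx) * (ri - mr) for xi, ri in zip(x, r))
    a = sxr / sxx if sxx else 0.0
    return a, mr - a * mx

def boost_linear(x, y, n_rounds=50, learning_rate=0.3):
    """Boosting with a linear base learner and squared loss."""
    a_total, b_total = 0.0, 0.0
    for _ in range(n_rounds):
        # Residuals of the current ensemble are the targets for the next learner.
        residuals = [yi - (a_total * xi + b_total) for xi, yi in zip(x, y)]
        a, b = fit_line(x, residuals)
        # A sum of linear models is still linear, so only two coefficients are tracked.
        a_total += learning_rate * a
        b_total += learning_rate * b
    return a_total, b_total

x = [0.0, 1.0, 2.0, 3.0, 4.0]
y = [2.0 * xi + 1.0 for xi in x]
slope, intercept = boost_linear(x, y)
```

Because the ensemble of linear learners collapses into one linear model, this setup cannot capture the non-linear interactions that tree base learners can, which is one reason trees are the usual choice.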

GBM is an algorithm, and you can find the details in Friedman's paper "Greedy Function Approximation: A Gradient Boosting Machine". Boosting algorithms are iterative functional gradient descent algorithms.

Lower subsampling ratios (the fraction of rows or columns used for each tree) help avoid over-fitting. XGBoost training is very fast and can be parallelized or distributed across clusters. The training methods used by the two algorithms are different.

What are the fundamental differences between XGBoost and the gradient boosting classifier from scikit-learn? Gradient boosting is similar to Adaptive Boosting (AdaBoost) but differs from it in certain aspects.

Gradient boosting is a technique for building an ensemble of weak models such that the predictions of the ensemble minimize a loss function.

AdaBoost (Adaptive Boosting) works by giving more weight to the examples that earlier learners got wrong. The diagram is taken from XGBoost's documentation. So, having understood what boosting is, let us discuss the competition between the two popular boosting algorithms: Light Gradient Boosting Machine (LightGBM) and Extreme Gradient Boosting (XGBoost).

I learned that XGBoost uses Newton's method to optimize the loss function, but I don't understand what happens when the Hessian is not positive definite. XGBoost (eXtreme Gradient Boosting) is an advanced implementation of the gradient boosting algorithm.
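On the Newton's method question, it may help to write out the second-order approximation. The notation below follows the usual presentation of XGBoost's objective and is not taken from the snippets above: $g_i$ and $h_i$ are the first and second derivatives of the loss with respect to the previous prediction, $I_j$ is the set of samples in leaf $j$, $T$ is the number of leaves, and $\gamma$, $\lambda$ are regularization constants.

```latex
\mathcal{L}^{(t)} \approx \sum_{i=1}^{n} \left[ g_i f_t(x_i) + \tfrac{1}{2} h_i f_t(x_i)^2 \right]
  + \gamma T + \tfrac{1}{2} \lambda \sum_{j=1}^{T} w_j^2,
\qquad
g_i = \frac{\partial\, l\big(y_i, \hat{y}_i^{(t-1)}\big)}{\partial\, \hat{y}_i^{(t-1)}}, \quad
h_i = \frac{\partial^2 l\big(y_i, \hat{y}_i^{(t-1)}\big)}{\partial\, \big(\hat{y}_i^{(t-1)}\big)^2}

w_j^{*} = -\frac{G_j}{H_j + \lambda},
\qquad
G_j = \sum_{i \in I_j} g_i, \quad H_j = \sum_{i \in I_j} h_i
```

Here each $h_i$ is a scalar per sample, not a full Hessian matrix. For convex losses such as squared error or logistic loss, $h_i \ge 0$, so with $\lambda > 0$ the denominator $H_j + \lambda$ stays positive and the Newton-style step is well defined. A custom non-convex loss could produce negative $h_i$, and this sketch says nothing about that case.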

XGBoost is an implementation of GBM, and you can configure which base learner is used. Extreme Gradient Boosting, or XGBoost for short, is an efficient open-source implementation of the gradient boosting algorithm. On the mathematical differences between GBM and XGBoost: first, I suggest you read the paper by Friedman about the Gradient Boosting Machine, applied to linear regression models, classifiers, and decision trees in particular.

The base learner can be a tree, a stump, or another model, even a linear model.

Comparison Between Adaboosting Versus Gradient Boosting Statistics For Machine Learning

The Intuition Behind Gradient Boosting Xgboost By Bobby Tan Liang Wei Towards Data Science

Boosting Algorithm Adaboost And Xgboost

Xgboost Versus Random Forest This Article Explores The Superiority By Aman Gupta Geek Culture Medium

The Structure Of Random Forest 2 Extreme Gradient Boosting The Download Scientific Diagram

A Comparitive Study Between Adaboost And Gradient Boost Ml Algorithm

Mesin Belajar Xgboost Algorithm Long May She Reign

The Ultimate Guide To Adaboost Random Forests And Xgboost By Julia Nikulski Towards Data Science
